NextGen vanishes on front page

My NextGen gallery kept vanishing on my WordPress front page. It did not show as long as the page was set as the front page; as soon as I picked a different front page, the images reappeared. Changing the theme did not help either: the gallery still disappeared.

I checked and rechecked the source code, but what I apparently missed was the gallery being wrapped in auto excerpt tags:

Redecorating the place II

Polypager’s strength clearly was in handling the database – it has foreign key capability, and without the faintest complaint Polly will display any MySQL database it is fed. However, it was never built with serving images in mind as a central feature. There is a gallery plugin in place, but my wishes soon surpassed its capabilities.

Zenphoto in turn is fantastic in handling text and images (and video by the way, which surprisingly posed the biggest hurdle in WordPress – the other was the 301 redirects, but in the end Tony McCreath’s redirect generator helped). The problem with Zenphoto is more an aesthetic one, as the available skins are limited and don’t really meet my expectations. The one I hacked together unfortunately »grew organically« over the years until recently it gracefully started falling apart.

Thus, today I make the move to WordPress and while I am at ease parting from Zenphoto, leaving Polly behind really hurts. So, thanks Nic for developing it and having me aboard, because in the process, I learned many a thing about distinguishing sensible feature requests from the other ones, about version management using svn and git, and also, in 2008, about how it feels to be at the receiving end of a proper hack.

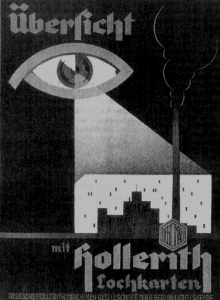

Mining Data

»Overview – through Hollerith Punch Cards«

Motherboard has an insightful article on the history and future of Diaspora (an open source, distributed alternative to Facebook). The article is very long and alongside the Diaspora-narrative there are several other issues it focuses on. One of them deals with data mining, which – with Facebook as a regular dinner table subject – is touched upon frequently around here.

The argument I hear most often when it comes to privacy issues is »I can’t see how this bit of information could possibly be vital or interesting to a third party«. The catch with data mining is, of course, the three-letter word this argument lacks: now.

Sooner or later someone might (or might not) come along who can see a relevance, even long after the information is in the open. And often it is not the data in itself that turns out to be explosive, but a new way in which it is connected to other harmless data. Or a new place it’s brought to.

Here’s one fun example from the Diaspora-article:

Last week, the Financial Times reported that a newly uncovered deal between Facebook and the data firm Datalogix allows the site to track whether ads seen on Facebook lead users to buy those products in stores, which is highly attractive intelligence for advertisers. (Datalogix does this by buying consumer loyalty data from retailers, and tracks in-store purchases by matching email addresses in its database to email accounts used to set up Facebook profiles, along with other account registration information.)

Alec Liu (2 Oct 2012): What Happened to the Facebook Killer? It’s Complicated. Motherboard.
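The matching described in the quote is, at its core, a join on a shared key. Here is a minimal sketch with entirely made-up records and field names (nothing in it reflects Datalogix’s or Facebook’s actual systems). It shows how two lists that look harmless in isolation become more telling once they are linked by email address:

```python
# Hypothetical data: neither list says much on its own.
loyalty_purchases = [
    {"email": "anna@example.org", "item": "running shoes"},
    {"email": "ben@example.org", "item": "cat food"},
]
ad_impressions = [
    {"email": "Anna@Example.org", "ad": "running shoes"},
    {"email": "carl@example.org", "ad": "cat food"},
]


def link_by_email(purchases, impressions):
    """Pair each purchase with the ads shown to the same (normalised) email."""
    ads_by_email = {}
    for row in impressions:
        key = row["email"].strip().lower()
        ads_by_email.setdefault(key, []).append(row["ad"])

    linked = []
    for row in purchases:
        key = row["email"].strip().lower()
        for ad in ads_by_email.get(key, []):
            linked.append({"email": key, "ad": ad, "bought": row["item"]})
    return linked


matches = link_by_email(loyalty_purchases, ad_impressions)
print(matches)
```

Only the address that appears in both lists produces a linked record; the point is that the link itself is the new piece of information.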

The future implications of the email address mix-and-match are not fully clear yet (although for a start I think it’s nobody’s business what I buy where). But there are other examples where the consequences are very clear. For instance, someone disclosed his credit card number to both Apple and Amazon. Ultimately this led to the destruction of his digital life – email account takeover, Twitter account takeover, phone wiped clean, computers wiped clean: proudly brought to you by small-scale data mining with a pinch of social engineering thrown in:

Amazon tech support gave them the ability to see a piece of information — a partial credit card number — that Apple used to release information. In short, the very four digits that Amazon considers unimportant enough to display in the clear on the web are precisely the same ones that Apple considers secure enough to perform identity verification.

Mat Honan (6 Aug 2012): How Apple and Amazon Security Flaws Led to My Epic Hacking. Wired.

And finally, my historian-friend’s favourite – and at the same time the most ghastly – data mining example of them all is how the 1933 census in Germany was later used to organise the deportation of Jews:

But Jews could not hide from millions of punch cards thudding through Hollerith machines, comparing names across generations, address changes across regions, family trees and personal data across unending registries. It did not matter that the required forms or questionnaires were filled in by leaking pens and barely sharpened pencils, only that they were later tabulated and sorted by IBM’s precision technology.

Edwin Black (2009): IBM and the Holocaust. Washington DC, p. 107.

Update 4 Aug 2013: I recently learned about the Rosa Liste (pink list). This list was kept by the German Empire and subsequently the Weimar Republic to monitor male homosexuals. In 1933 the list fell into the hands of the new government, which used it to go straight from monitoring to murder. Case in point: you never know what the meaning of any given datum is going to be in the future.

Cloud a la ownCloud

Sync Contacts

- Thunderbird: Sogo Connector + »more functions for address book« (optional)

- Android: CardDAV

Sync Calendar

- Thunderbird: Sogo Connector + Lightning

- Android: CalDAV

Desktop Client

- Setting it up:

Somehow it only worked as root; chown did the trick (see here):

sudo chown -Rc USER:USER /home/USER/.local/share/data

- Syncing

Syncing works pretty well – but unfortunately not well enough. I do like that one can connect arbitrary folders to sync (for example a local folder »Documents« may be called »docs« on OC). What I like less is that you can only run two-way sync. There is no option »sync only from server to machine« or »sync only from machine to server«. That would be very helpful, as I use Unison to sync very large parts of my hard drives among several machines – much more than I want or need in OC. Yesterday I lost some data, and I think it was because ownCloud got confused by two machines syncing to it in addition to syncing directly with each other.

- Android

The oc-app allows syncing to the phone on a file-by-file basis, which is neat. It also adds an ownCloud option to the share menu. What I find really convenient, though, is that ownCloud allows es-file-explorer to connect.

Sharing Files

Further tutorials and resources I used

- Meinnoteblog

- Points of Interest

- SoGo

- Owncloud on Webdav and this support page (very slow at the moment)

- Jamie Flarity: Make Webdav persist in Nautilus

- Techdirt has a review of various solutions, including a self-hosted Gmail-style email – ownCloud is missing though.

[article started in August 2012; final, rewritten version from January 2014]

.htaccess redirect with GET variable

RewriteEngine on

RewriteBase /

Then, for the actual rule there’s a lot of generally helpful stuff out there – only it didn’t help me:

- the string in question was a GET-variable as part of a dynamic URL

- the string could be in the middle of the URL or at the end

My task was to replace a chunk from a dynamic URL and leave unchanged whatever was before or after this chunk.

The example was the page you’re reading at the moment. It used to be called »Recettes« and I wanted to rename it to »Recipes«.

The overview was reached through http://www.brasserie-seul.com/?Recettes, but this article had the URL http://www.brasserie-seul.com/?Recettes&nr=60. In addition this page has groups, so there is also http://www.brasserie-seul.com/?Recettes&group=web, […]group=ubuntu etc.

It took me literally hours of research, until I finally found Carolyn Shelby’s very helpful article. Her code took me almost there:

RewriteCond %{QUERY_STRING} ^(.*)Recettes(.*)$

RewriteRule ^$ Recipes? [R=301,L]
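One likely reason this only gets almost there: the substitution `Recipes?` ends in a bare question mark, which tells mod_rewrite to discard the original query string, so whatever the two `(.*)` groups captured is lost. The following Python sketch (not Apache configuration; the function name `rename_chunk` is made up for illustration) applies the same pattern to the query string and shows how the text before and after »Recettes« could be carried over. In mod_rewrite, the groups of the last matched RewriteCond are available as %1 and %2.

```python
import re
from typing import Optional

# Same pattern as the RewriteCond above, applied to the query string only.
PATTERN = re.compile(r"^(.*)Recettes(.*)$")


def rename_chunk(query_string: str, new_name: str = "Recipes") -> Optional[str]:
    """Return the rewritten query string, or None if the rule would not fire."""
    match = PATTERN.match(query_string)
    if match is None:
        return None
    before, after = match.group(1), match.group(2)  # %1 and %2 in mod_rewrite
    return f"{before}{new_name}{after}"


for qs in ["Recettes", "Recettes&nr=60", "Recettes&group=web", "About"]:
    print(qs, "->", rename_chunk(qs))
```

The last entry prints `None` because the condition does not match, which corresponds to the rule leaving unrelated URLs alone.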

Redecorating the Place

So after six years of tiny letters and narrow columns, I made a new skin. This is how the Brasserie looked until today:

After quiet times on this website, I have for a while now felt the motivation to use it more again, especially because I was never too happy with the social networking sites big and small. I made an earlier attempt when I felt that Google already knew enough and more intolerable FB stories hit the news, outweighing the major advantage: low maintenance effort with high connectivity. I finally decided to get rid of both it and some major dust at the trusty old Brasserie when I read Scott Hanselman’s »Your Words Are Wasted«:

You are pouring your words into increasingly closed and often walled gardens. You are giving control – and sometimes ownership – of your content to social media companies that will SURELY fail.

After considerable restructuring and restyling work, I hope the motivation sticks for a while!

New Xampp Security Concept

New XAMPP security concept:

Access to the requested object is only available from the local network.

This setting can be configured in the file “httpd-xampp.conf”.

If you think this is a server error, please contact the webmaster.

Error 403

It is always very helpful to a) tell people to contact someone they don’t have and b) tell them to edit a file without telling them where it is and what to edit. After some poking around and several strange black magic suggestions, I found a few sources pointing towards what also worked for me. cpighin summed it up best:

Open httpd-xampp.conf in a text editor – for instance by typing

sudo gedit /opt/lampp/etc/extra/httpd-xampp.conf

Then go and find the section »since XAMPP 1.4.3«. There it should say

<Directory "/opt/lampp/phpmyadmin">

AllowOverride AuthConfig Limit

Order allow,deny

Allow from all

</Directory>

Replace it with

<Directory "/opt/lampp/phpmyadmin">

AllowOverride AuthConfig Limit

Require all granted

</Directory>

Then restart xampp through

sudo /opt/lampp/lampp restart
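The change above swaps the access-control directives of Apache 2.2 (`Order`, `Allow from`) for the `Require` syntax used by Apache 2.4. If several blocks need the same treatment, the edit could be scripted. The following is only a sketch: the function name `modernize_access` is made up, it handles just the `Order allow,deny` / `Allow from all` pair shown here, and it works on a string rather than touching the file, so back up httpd-xampp.conf before adapting it.

```python
import re


def modernize_access(conf_text: str) -> str:
    """Replace 'Order allow,deny' + 'Allow from all' with 'Require all granted'."""
    out = []
    for line in conf_text.splitlines():
        stripped = line.strip().lower()
        if re.fullmatch(r"order\s+allow\s*,\s*deny", stripped):
            continue  # dropped: the 2.4 syntax has no separate Order line
        if re.fullmatch(r"allow\s+from\s+all", stripped):
            indent = line[: len(line) - len(line.lstrip())]
            out.append(f"{indent}Require all granted")
            continue
        out.append(line)  # everything else is passed through unchanged
    return "\n".join(out)


old_block = """<Directory "/opt/lampp/phpmyadmin">
    AllowOverride AuthConfig Limit
    Order allow,deny
    Allow from all
</Directory>"""

print(modernize_access(old_block))
```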

The Right Password Manager

For instance, he showed epic laxness concerning his personal data by not obeying Schofield’s 2nd law of computing. It states »data doesn’t really exist unless you have at least two copies of it.« I do obey this law by using backintime and I recommend you use something similar.

The guy also daisy-chained password-resets and email-accounts, offering a single point of entry to his digital everything. This got me thinking about my own passwords. They are generally very strong and I have a lot of different passwords. Sometimes too many different ones, so I keep forgetting which ones I used where. But sometimes not enough different ones, as some applications share some passwords. This needs to change, so it is time for a password manager.

[update 30.8.2012] I installed KeePassX a while ago and am quite happy. I thought I had found the perfect combination when Nic mentioned the open source, self-hosted ownCloud, but I failed. Looking for another solution, I went for Ubuntu One.

Ubuntu One does not support Android 1.6 any more, but ES File manager does. And it also supports Ubuntu One.

[update 26.9.2019] Bill of Pixel Privacy sent me an article on passwords which has a lot of interesting facts and figures. So if you think all this doesn’t concern you, you might want to check it out: https://pixelprivacy.com/resources/reusing-passwords/

[/update]

What are the options?

- Passwordmaker

- KeepassX

for *nix-based systems

+ independent from KeePass but they share a db-format, so you can port stuff

+ GNU & Open Source

- KeePass

? Any connection to KeepassX?

+ Has a portable version

+ independent from KeePassX, but uses same db-format, so you can port your stuff

– needs Wine or Windows

- WebKeePass

Java-port of KeePass

- Revelation

- Mitto

+ apparently self-hosted

- Pasaffe

+ DB is Passwordsafe 3.0 compatible

- Passwordgorilla

- Passwordsafe

+ DB compatible with pasaffe

At askubuntu the majority recommends KeePassX. On stackexchange WebApps there is a thread on self hosted variants.

What are my requirements?

* sufficient cryptographic algorithm – SHA1 or MD5 won’t do.

* accessible from on the road

* Open Source

[update 21.8.2012]

I am trying KeePassX at the moment. I like the interface and have already forgotten most passwords – so what I don’t like is that I have started relying heavily on a piece of software. Anyway, there are at least two Android clients that can read KeePassX’s database:

- KeePassDroid

+ requires Android 1.5 and up

+ syncs to Dropbox

+ good reviews

+ free

+ large user base

- Walletx Password Manager

+ syncs to Dropbox

– requires Android 2.2 and up

– not so good reviews

– small user base

Feeds a la Tiny Tiny RSS

[update 2011-11-10] Both this and Nic’s article have been featured on tt-rss’ project site. There are a bunch of other interesting and useful articles, too, so check it if you’re interested in tt-rss. [/update]

A while ago, Google updated their reader, killing one of the best features: sharing. I looked around a bit for a suitable alternative and in the end settled on Tiny Tiny RSS.

Advantages:

- It runs on my server, so nothing can be done to it unless I approve

- Sharing is easy

- It’s simple

Nic, who thankfully came up with this great alternative, has a more techno-philosophical article on the switch.

Some minor issues I had while installing:

1. open_basedir not supported

Problem: during installation I got the fatal error

php.ini: open_basedir is not supported

Solution: Open <tt-rss-root>/sanity_check.php (update: in recent versions it’s in <root/include>) and find the entry

if (ini_get("open_basedir")) { $err_msg = "php.ini: open_basedir is not supported."; }

World Wide Food Chain

As soon as the smart guys make the security hole public, the swarm of pimple-stricken milksops with teenage angst who read what the smart guys publish comes marauding. They upload a bit of this, toy around a bit in that and then claim they »hacked« something while they really only hacked their pants once more.

This way the smart guys don’t have to bother telling the people about their security holes (probably assuming backup copies and a restore script anyway; if this doesn’t exist – more valuable lessons learned): they have their monkey baggage to do so for them.